Activation Functions in Neural Networks: A CI/CD Pipeline Analogy

Activation functions decide which neurons fire, and how strongly, as input data moves through a network.

Activation functions are what make a neural network non-linear: without them, any stack of layers collapses into a single linear transformation. To build intuition, let's use an analogy that is familiar to DevOps and cloud engineers: the traditional CI/CD pipeline.

Input Layer: The Starting Point

In a typical setup, a code change pushed to the repository fires a Git hook that triggers the pipeline to test, build, and deploy. Similarly, in an Artificial Neural Network (ANN), data first enters through the input layer.

Hidden Layers: The Processing Stage

Just as new or modified code is built as it moves through the CI/CD pipeline, input data in an ANN is processed by the hidden (middle) layers, where each neuron computes a weighted sum of its inputs plus a bias.
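As a minimal sketch of what "weights and biases applied" means for a single neuron, the snippet below computes the value a neuron holds before any activation function is applied. The function name `weighted_sum` and all of the numbers are hypothetical and chosen only for illustration.

```python
def weighted_sum(inputs, weights, bias):
    """Pre-activation value of one neuron: sum(w_i * x_i) + bias."""
    return sum(w * x for w, x in zip(weights, inputs)) + bias


# Hypothetical values, chosen only for illustration.
inputs = [1.0, 2.0, 3.0]
weights = [0.5, -1.0, 0.25]
bias = 0.5

z = weighted_sum(inputs, weights, bias)
print(z)  # 0.5*1 - 1.0*2 + 0.25*3 + 0.5 = -0.25
```

This raw value is what the activation function receives as its input.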

Activation Functions: The Decision Makers

In a CI/CD pipeline, the process moves from one stage to the next based on the outcome of each step. Likewise, the values computed in one layer are passed on to the next, and how much of each value gets through depends on which neurons are activated.

The activation function is what makes that decision: it takes each neuron's weighted sum and determines the neuron's output.
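To see why this decision step matters, here is a small sketch that assumes two hypothetical one-neuron layers with made-up weights. Without an activation function between them, the two layers behave exactly like one linear layer. Placing a ReLU (covered later in this article) between them breaks that equivalence.

```python
def linear(x, w, b):
    """One linear layer with a single input and a single neuron."""
    return w * x + b


def relu(z):
    """Keep positive values, replace negative values with zero."""
    return max(0.0, z)


# Hypothetical weights and biases for two one-neuron layers.
w1, b1 = 2.0, 1.0
w2, b2 = -3.0, 0.5


def two_linear_layers(x):
    return linear(linear(x, w1, b1), w2, b2)


def collapsed_single_layer(x):
    # Algebraically the same function: (w2*w1)*x + (w2*b1 + b2)
    return linear(x, w2 * w1, w2 * b1 + b2)


def two_layers_with_relu(x):
    return linear(relu(linear(x, w1, b1)), w2, b2)


for x in [-2.0, 0.0, 2.0]:
    print(x, two_linear_layers(x), collapsed_single_layer(x), two_layers_with_relu(x))
```

For `x = -2.0` the two purely linear versions both give 9.5, while the version with ReLU gives 0.5, so it can no longer be rewritten as a single linear layer.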

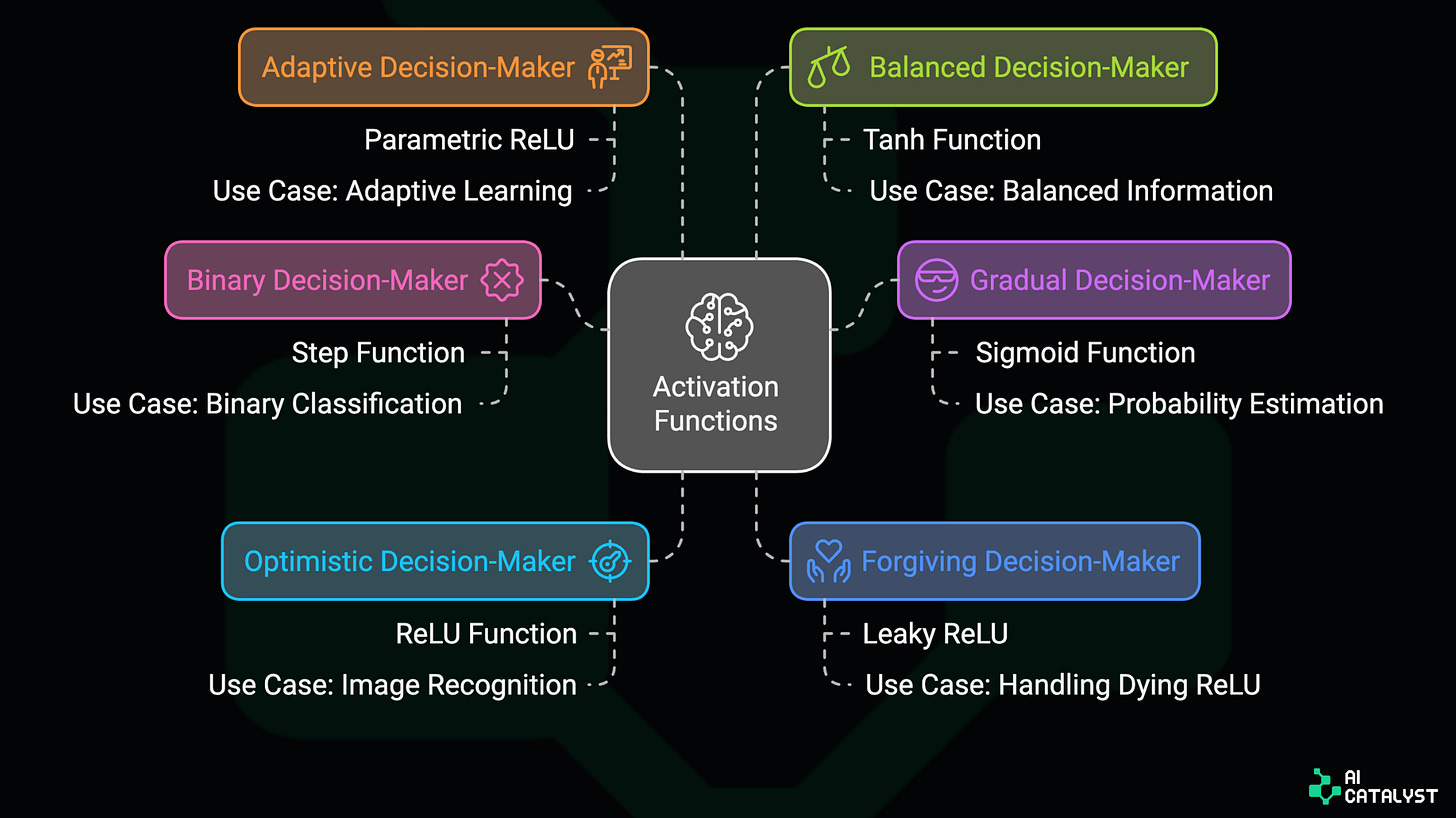

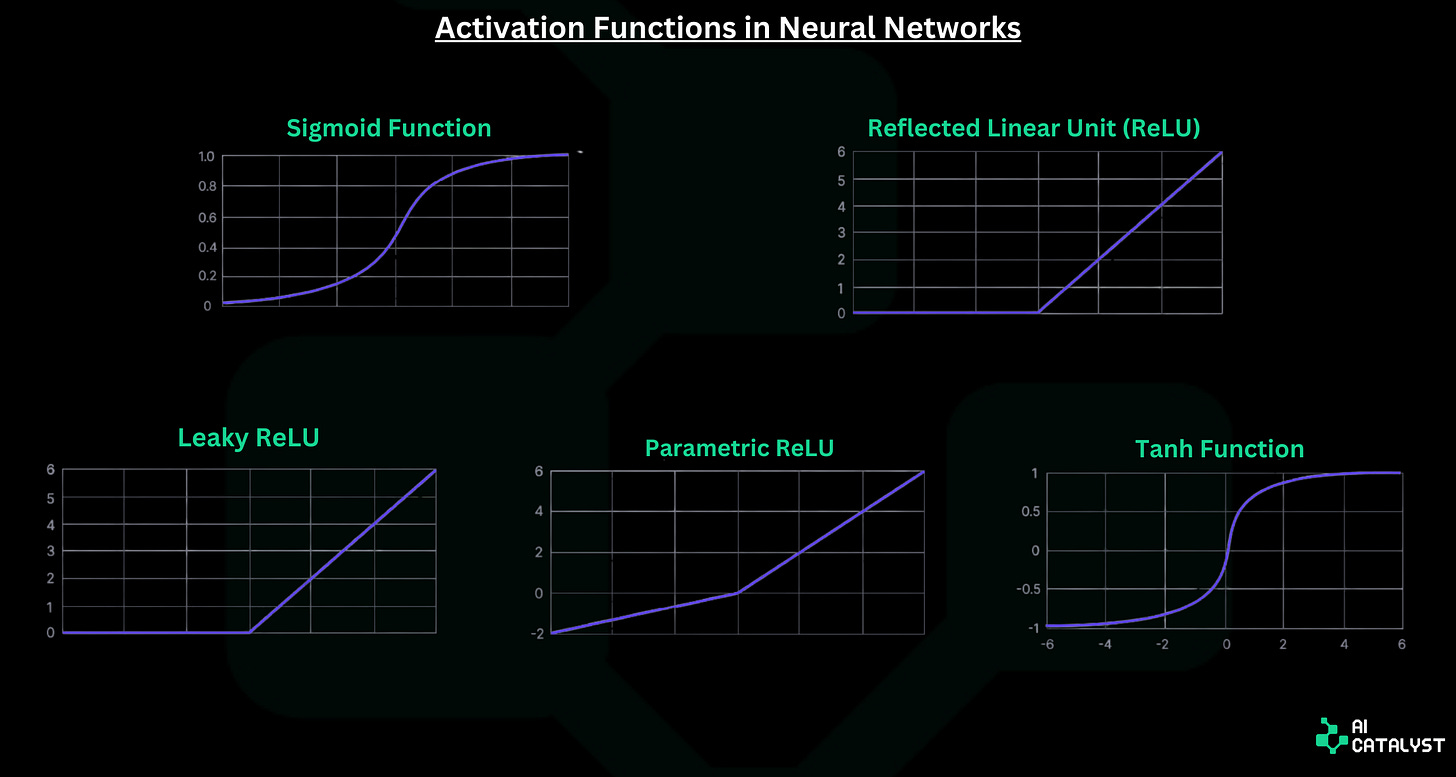

Types of Activation Functions

Let's explore different activation functions and how they relate to CI/CD pipeline strategies.

The Binary Decision-Maker (Step Function):

In a strict CI/CD pipeline, a stage either passes or fails, and a single failed stage stops the whole pipeline. The step function works the same way: a neuron either fires or stays silent, depending on whether the value it receives from the previous layer crosses a threshold. There is no partial result.

The neuron activates (outputs 1) when the input exceeds a threshold.

Otherwise, it does nothing (yields 0).

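Use case: Simple binary classifiers such as the classic perceptron. It lacks nuance for complex problems, and because its gradient is zero everywhere except at the threshold, it cannot be trained with gradient-based methods.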
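The behaviour described above can be sketched in a few lines. The default threshold of 0 is an assumption made for this example; the function name `step` is also just illustrative.

```python
def step(z, threshold=0.0):
    """Return 1 if z exceeds the threshold, otherwise 0."""
    return 1 if z > threshold else 0


print([step(z) for z in [-2.0, 0.0, 0.5, 3.0]])  # [0, 0, 1, 1]
```

Note that an input exactly equal to the threshold does not activate the neuron here, because the text above says the input must exceed it.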

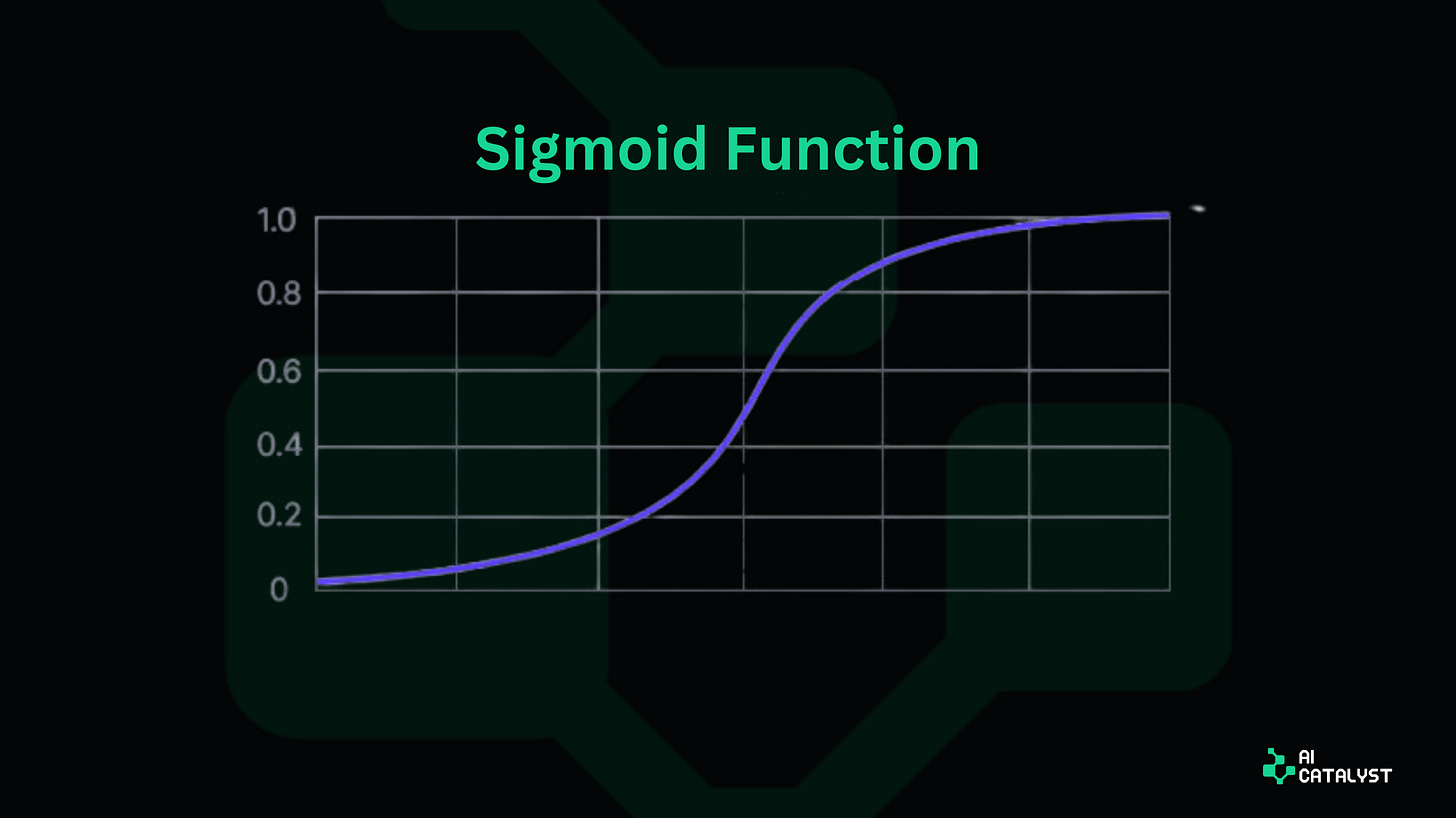

The Gradual Decision-Maker (Sigmoid Function):

Picture a pipeline that assigns each stage a confidence score between 0 and 1 instead of a hard pass or fail; the higher the score, the more likely the code is to proceed. Sigmoid does the same for a neuron: it squashes the neuron's input into a score between 0 and 1, and the larger the input, the closer the score is to 1 and the more strongly the signal is passed to the next layer.

Maps any input to a value between 0 and 1.

Higher scores indicate a higher likelihood of neuron activation.

Use case: Ideal for probability estimations and binary classification problems like spam detection.
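A minimal sketch of the sigmoid, 1 / (1 + e^(-z)), using only the standard library. Splitting the computation by the sign of `z` is a choice made here to avoid overflow for large negative inputs; it is not the only way to write it.

```python
import math


def sigmoid(z):
    """Logistic sigmoid: maps any real number into the range 0 to 1."""
    if z >= 0:
        return 1.0 / (1.0 + math.exp(-z))
    # For negative z, use the equivalent form e^z / (1 + e^z) to avoid overflow.
    e = math.exp(z)
    return e / (1.0 + e)


print([round(sigmoid(z), 3) for z in [-4.0, 0.0, 4.0]])  # [0.018, 0.5, 0.982]
```

An input of 0 lands exactly in the middle at 0.5, which is why 0.5 is a common cut-off when a sigmoid output is read as a probability.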

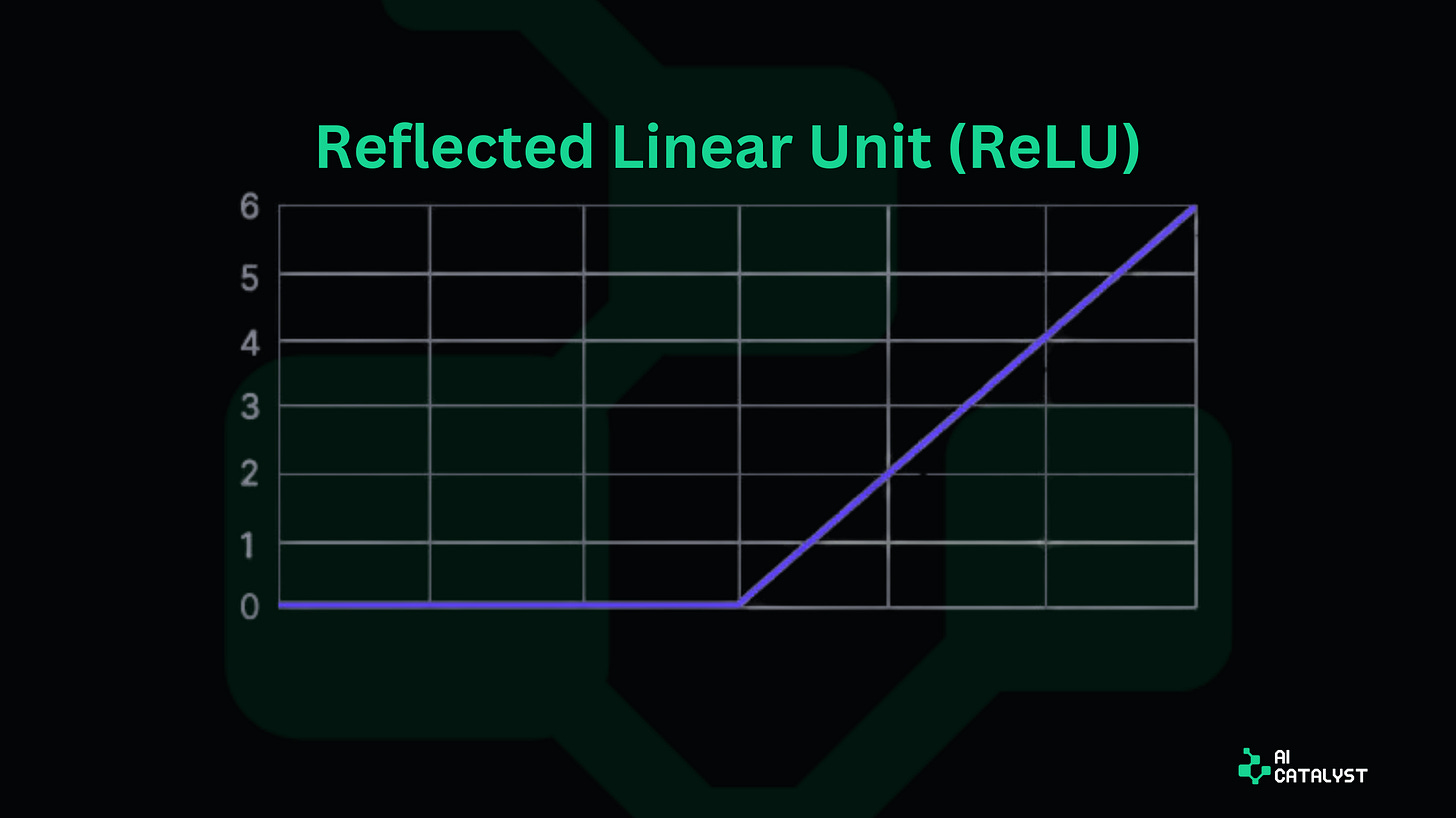

The Optimistic Decision-Maker (ReLU Function):

ReLU (Rectified Linear Unit) cares only about positive values. Any negative value coming from the previous layer is discarded, so only positive signals are passed on.

Passes positive inputs through unchanged.

Turns negative inputs into zero.

Use case: Widely used in deep learning models, especially convolutional neural networks for image recognition tasks.
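The two rules above amount to taking the larger of zero and the input. A minimal sketch, with an illustrative function name:

```python
def relu(z):
    """Pass positive inputs through unchanged, turn negative inputs into zero."""
    return max(0.0, z)


print([relu(z) for z in [-3.0, -0.5, 0.0, 2.0]])  # [0.0, 0.0, 0.0, 2.0]
```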

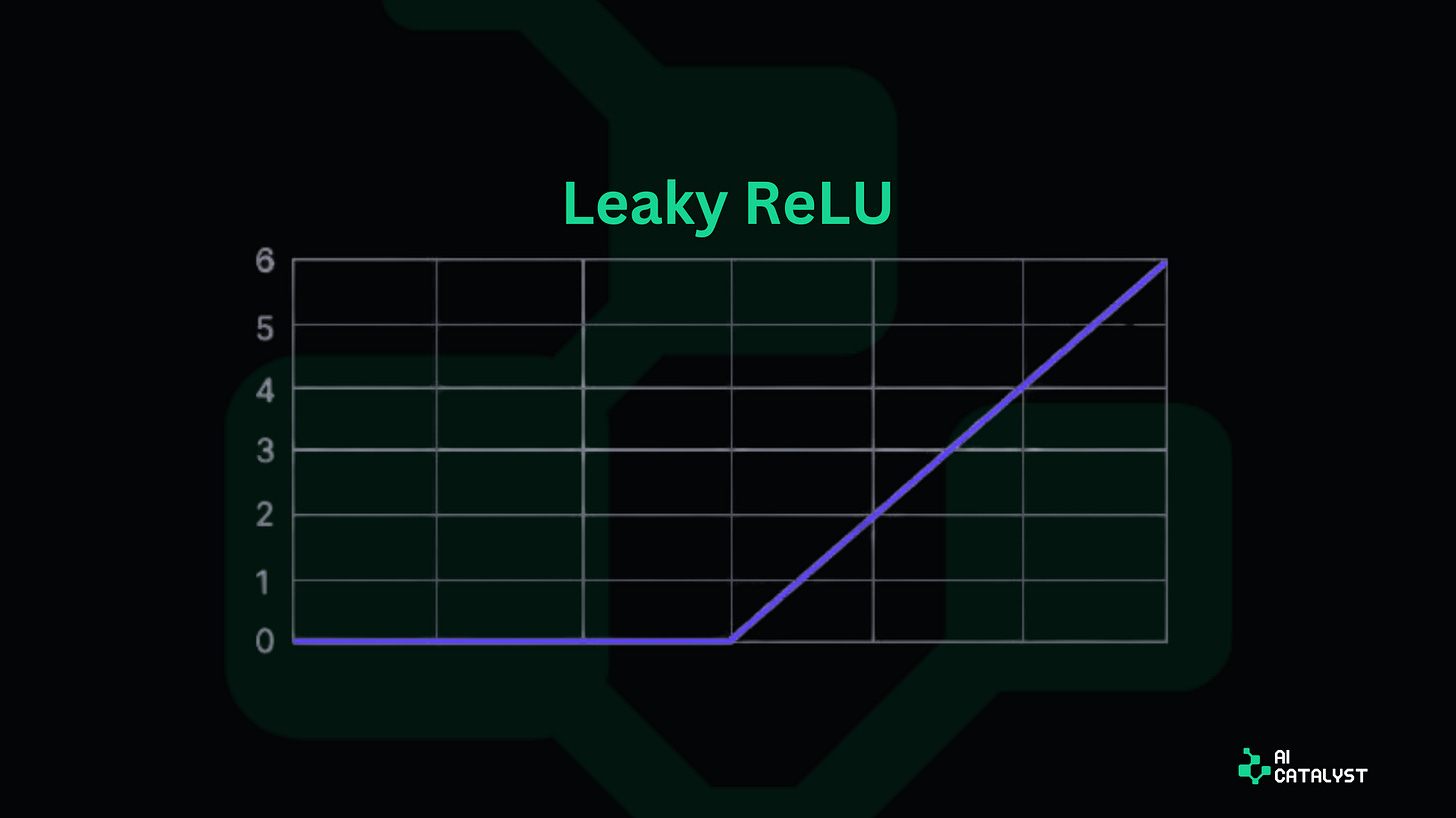

The Gradual Ramp-Up Decision-Maker (Leaky ReLU):

Think of a CI/CD pipeline that tolerates small failures and still progresses to the next stage. In a neural network, Leaky ReLU behaves the same way:

Positive inputs are treated as relevant features and are passed through unchanged.

Negative inputs are not set to zero. Instead, they are multiplied by a small fixed slope (commonly 0.01), so a little of the signal survives.

Use case: Helps prevent the "dying ReLU" problem where neurons become inactive and stop learning.
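A minimal sketch of Leaky ReLU and its gradient. The slope of 0.01 is a commonly used default and is an assumption here, as are the function names. The gradient function shows why the "dying ReLU" problem is reduced: for negative inputs the gradient is small but not zero, so the neuron can still be updated during training.

```python
def leaky_relu(z, negative_slope=0.01):
    """Positive inputs pass unchanged; negative inputs are scaled down."""
    return z if z > 0 else negative_slope * z


def leaky_relu_grad(z, negative_slope=0.01):
    """Slope of leaky_relu at z: 1 for positive inputs, negative_slope otherwise."""
    return 1.0 if z > 0 else negative_slope


print([leaky_relu(z) for z in [-4.0, -2.0, 0.0, 3.0]])  # [-0.04, -0.02, 0.0, 3.0]
print(leaky_relu_grad(-2.0))  # 0.01 (plain ReLU would give 0 here)
```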

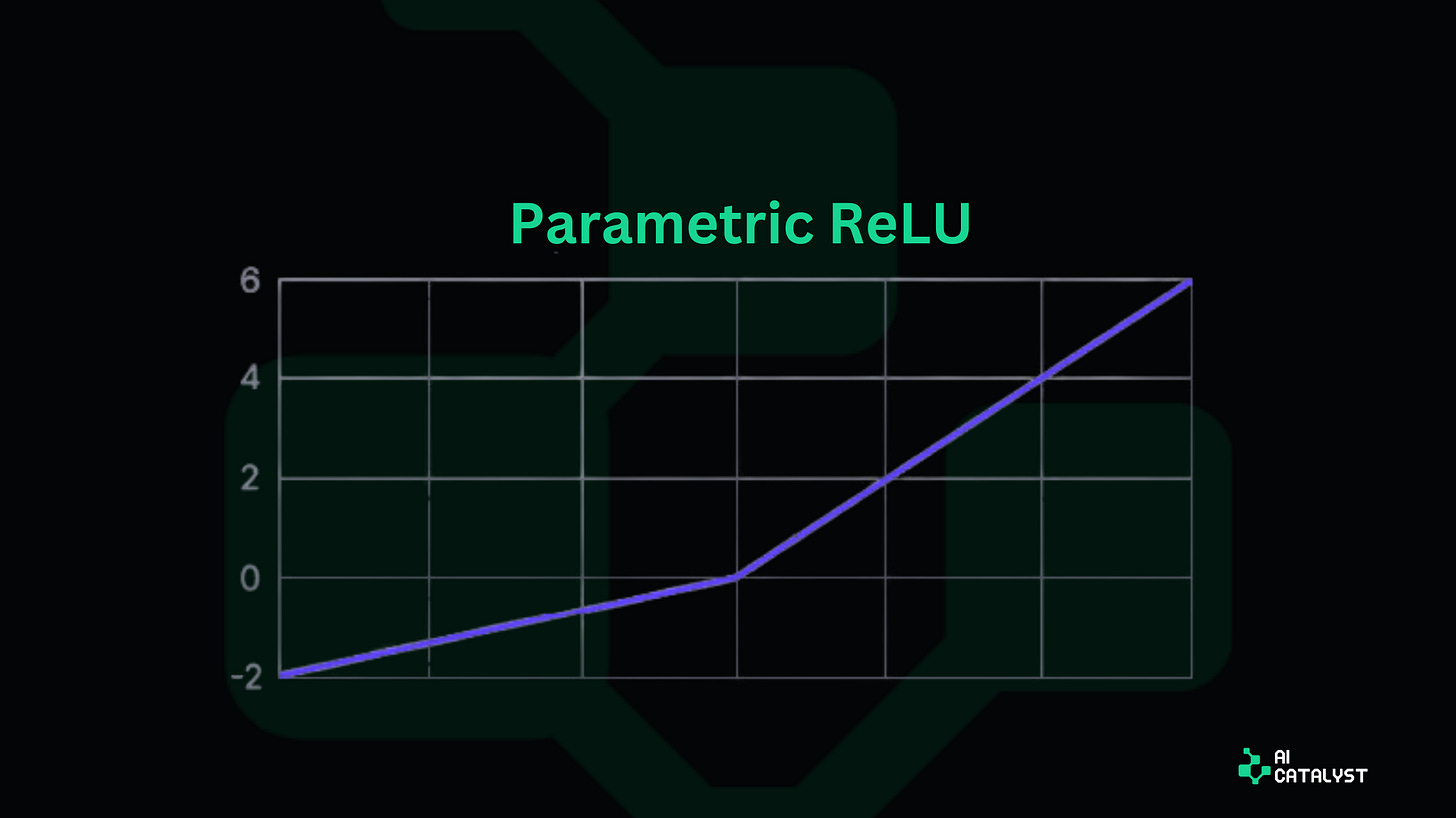

The Adaptive Decision-Maker (Parametric ReLU):

PReLU works like Leaky ReLU, with one important distinction: the slope applied to negative inputs is learned during training rather than fixed.

Positive inputs, the "good" data, pass through unchanged to the next layer.

Negative inputs, the "less useful" data, are not ignored completely. Instead, they pass through scaled down, so they carry less weight.

In each layer, PReLU has an adjustable filter, a slope parameter, that controls how much of the "less useful" data passes through.

Over the course of training, the network learns the best filter setting for each neuron.

Like a CI/CD system that is tuned based on the results of earlier runs, the network adjusts these settings based on the errors it makes during training.

Why is this helpful in neural networks?

Each neuron in the network can have its own optimal way of handling less useful information.

This flexibility helps the neural network learn more efficiently and potentially perform better than networks with fixed rules.
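To make the "adjustable filter" idea concrete, here is a toy sketch in which a single slope parameter `alpha` is learned by gradient descent. Everything about the setup is an assumption made for illustration: one negative input, a made-up target output, a squared-error loss, and a hand-picked learning rate. In a real network, `alpha` would be updated by backpropagation alongside the weights and biases.

```python
def prelu(z, alpha):
    """Positive inputs pass unchanged; negative inputs are scaled by alpha."""
    return z if z > 0 else alpha * z


def prelu_grad_alpha(z):
    """Derivative of prelu with respect to alpha: z for z <= 0, else 0."""
    return 0.0 if z > 0 else z


# Hypothetical toy problem: for input -2.0 we want output -0.5,
# which corresponds to an ideal slope of 0.25.
z, target = -2.0, -0.5
alpha = 0.01          # starting slope (assumed)
learning_rate = 0.1   # assumed

for _ in range(20):
    error = prelu(z, alpha) - target
    # Gradient of the squared error (error ** 2) with respect to alpha.
    grad = 2.0 * error * prelu_grad_alpha(z)
    alpha -= learning_rate * grad

print(round(alpha, 4))  # 0.25
```

The slope starts at a fixed guess and moves toward the value that best fits the data, which is the behaviour that distinguishes PReLU from Leaky ReLU.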

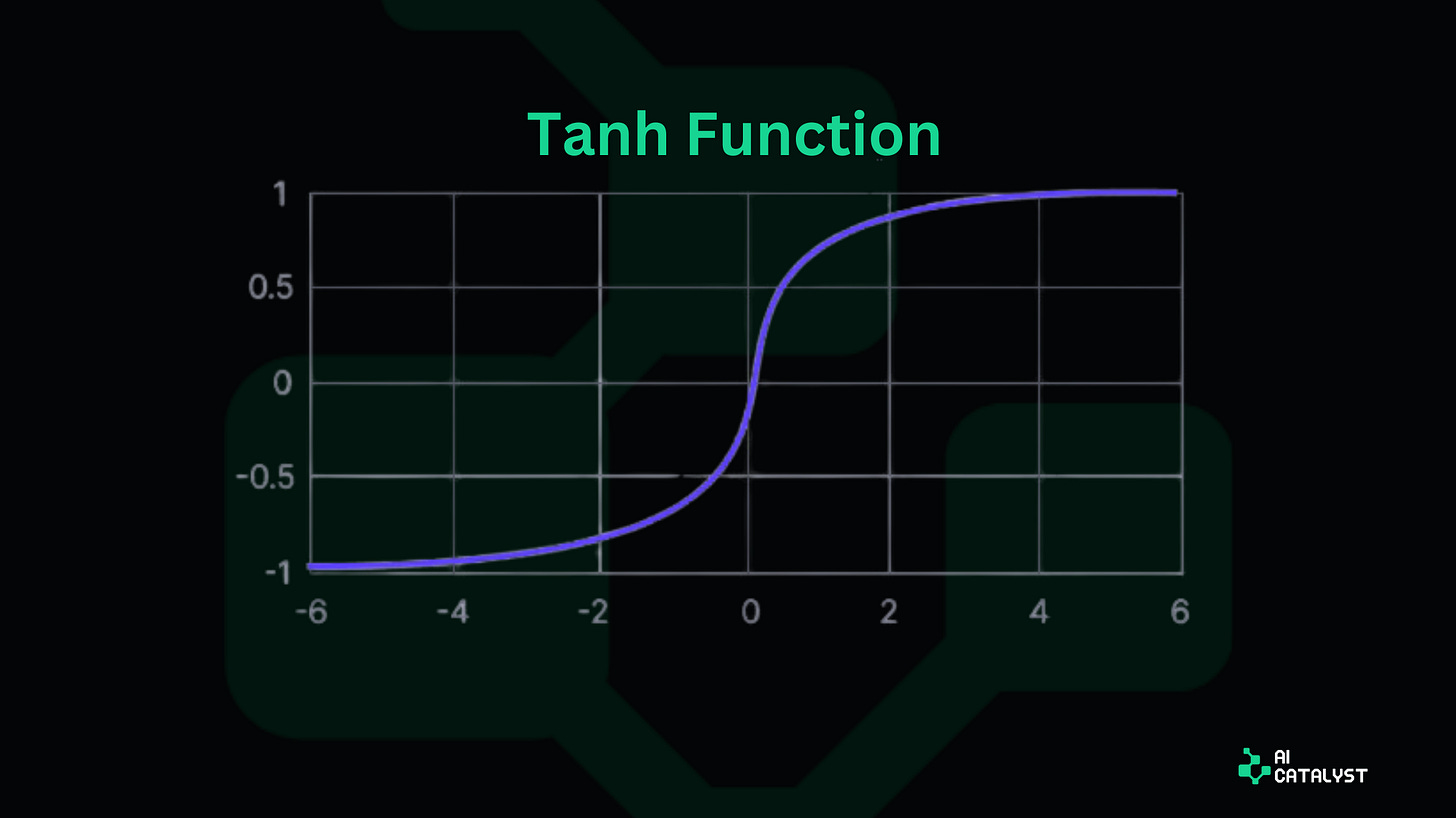

The Balanced Decision-Maker (Tanh):

Instead of a simple pass or fail, this function assigns a rating between -1 and +1.

Positive changes get positive scores and negative changes get negative scores.

It treats both good and bad changes equally, just in opposite directions.

In the neural network:

Good information (positive inputs) gets a positive score, moving the network towards a certain decision.

Bad information (negative inputs) gets a negative score, moving the network away from that decision.

Neutral information gets a score close to zero, not influencing the decision much.

This is helpful because:

The network can tell the difference between good, bad, and neutral information.

It helps keep the information balanced as it moves through the network, making learning more stable.

It works well when your data has both strongly positive and strongly negative aspects.

However, there's a catch:

For very strong positive or negative inputs, the output saturates near +1 or -1 and barely changes, so the gradient becomes very small. This is like a pipeline that becomes less sensitive to extreme changes, and it can slow down learning in very deep networks (the vanishing gradient problem).
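This effect can be observed with a short sketch that uses `math.tanh` from the standard library and the derivative 1 - tanh(z)^2. The helper name `tanh_grad` and the sample inputs are illustrative choices.

```python
import math


def tanh_grad(z):
    """Derivative of tanh at z: 1 - tanh(z)^2."""
    t = math.tanh(z)
    return 1.0 - t * t


# As the input grows, the output approaches 1 and the gradient approaches 0.
for z in [0.0, 1.0, 3.0, 6.0]:
    print(z, math.tanh(z), tanh_grad(z))
```

At 0 the gradient is 1, its maximum. By an input of 6 the gradient has dropped below 0.0001, which is why strongly saturated neurons learn slowly.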

In simple terms, Tanh is like a fair and balanced function in a neural network that carefully weighs both positive and negative information, helping the network make more nuanced decisions. This balanced approach is useful for tasks where both positive and negative distinctions are important.

Let's wrap up with a summary of the activation functions and the CI/CD pipeline strategies they correspond to.

Summary: Activation Functions as CI/CD Pipeline Strategies

Step Function - The Binary Pipeline: Strict pass/fail criteria.

Sigmoid - The Cautious Pipeline: Gradual decision curve between 0 and 1.

ReLU - The Optimistic Pipeline: Fast-tracks positive inputs and blocks negative ones.

Leaky ReLU - The Forgiving Pipeline: Lets a small fraction of each negative input pass.

Parametric ReLU - The Adaptive Pipeline: Learns how much of each negative input to let through.

Tanh - The Balanced Pipeline: Evaluates both positive and negative impacts equally.

Choosing the right activation function, like selecting the appropriate CI/CD strategy, depends on your specific problem and data characteristics. Each function has its strengths and ideal use cases in neural network architectures.

Real-World Applications:

ReLU is often used in convolutional neural networks for image recognition tasks.

Sigmoid is common in binary classification problems, like spam detection.

Tanh is useful in recurrent neural networks for natural language processing.

Conclusion:

Understanding activation functions is key to building effective neural networks. Like optimizing a CI/CD pipeline, selecting the right activation function can significantly impact your model's performance and learning capability. We encourage you to experiment with these functions in your projects and observe their effects on your model's behaviour and results.