Amazon Prime Video saved 90% on infrastructure. By doing the one thing every architect says never to do. They deleted their microservices. Built a monolith instead. Here’s why it worked and what it means for your K8s bill.

Amazon Prime Video just saved 90% on infrastructure costs.

- Naveen

01. THE SYMPTOM

Prime Video’s video quality monitoring service had a problem that every DevOps engineer fears:

Infrastructure costs were rising faster than traffic.

Sound familiar? It usually looks like this:

You add autoscaling. Costs go up.

You optimize containers. The bill stays high.

You monitor everything. Nothing improves.

Your dashboard shows “Perfect Health” metrics:

✓ Pods are scaling correctly

✓ CPU usage looks reasonable

✓ Error rates are at zero

But the AWS bill keeps climbing anyway.

This is the “Silent Leak”: the gap between a system that works and one that is cost-effective. Prime Video hit this wall at massive scale, but the pattern appears much earlier. If you’re running distributed services on Kubernetes and your cloud bill grows faster than your user base, you are seeing the same symptom.

The question isn’t: “Is my system working?” The question is: “Is my architecture actually matched to my workload?”

For Prime Video, the answer was a flat no.

02. THE “BEST PRACTICE” BLUEPRINT

They built the system exactly how the textbooks tell you to: as a highly decoupled, event-driven microservices architecture.

The workflow looked like a game of data ping-pong:

S3: Video is uploaded.

Lambda 1: Pulls video from S3 → detects defects → writes back to S3.

Step Functions: Triggers the next stage.

Lambda 2: Pulls results from S3 → processes them → writes back to S3.

Step Functions: Orchestrates the final handoff.

The Loop: Pull → Process → Write → Repeat.
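To make the ping-pong concrete, here’s a minimal Python sketch that swaps S3 for an in-memory dictionary that counts every read and write. `CountingStore` and the two placeholder stages are hypothetical stand-ins, not Prime Video’s code; the point is only to count storage round trips for the two-Lambda flow versus a single pass.

```python
from dataclasses import dataclass, field


@dataclass
class CountingStore:
    """Hypothetical in-memory stand-in for S3 that counts every GET and PUT."""
    objects: dict = field(default_factory=dict)
    gets: int = 0
    puts: int = 0
    bytes_moved: int = 0

    def get(self, key: str) -> bytes:
        data = self.objects[key]
        self.gets += 1
        self.bytes_moved += len(data)
        return data

    def put(self, key: str, data: bytes) -> None:
        self.objects[key] = data
        self.puts += 1
        self.bytes_moved += len(data)


def detect_defects(video: bytes) -> bytes:
    return video  # placeholder for "Lambda 1" work; output assumed same size


def process_results(results: bytes) -> bytes:
    return results  # placeholder for "Lambda 2" work


def distributed_pipeline(store: CountingStore, key: str) -> None:
    # Lambda 1: pull from storage, process, write back.
    store.put(f"stage1/{key}", detect_defects(store.get(key)))
    # Lambda 2: pull Lambda 1's output from storage, process, write back.
    store.put(f"stage2/{key}", process_results(store.get(f"stage1/{key}")))


def single_pass_pipeline(store: CountingStore, key: str) -> None:
    # Pull once, keep the intermediate result in memory, write once.
    store.put(f"final/{key}", process_results(detect_defects(store.get(key))))


if __name__ == "__main__":
    video = b"x" * 1_000_000  # 1 MB stand-in for a video file
    for pipeline in (distributed_pipeline, single_pass_pipeline):
        store = CountingStore(objects={"video.mp4": video})
        pipeline(store, "video.mp4")
        print(pipeline.__name__, store.gets, store.puts, store.bytes_moved)
    # distributed_pipeline 2 2 4000000
    # single_pass_pipeline 1 1 2000000
```

With two stages, every byte of the video crosses the storage boundary four times; the single pass touches it twice (one read, one write).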

Why did they build it this way? Because it checked every “Modern Architect” box:

Serverless? ✓ (No servers to manage!)

Microservices? ✓ (Fully decoupled!)

Event-driven? ✓ (Reactive and scalable!)

Cloud-Native? ✓ (The AWS “Gold Standard”!)

And it worked. Technically, it was a masterpiece:

It scaled to zero when not in use.

Each component was isolated (a failure in Lambda 1 didn’t kill Lambda 2).

Everything was managed as clean, version-controlled Infrastructure as Code.

By every standard architectural metric, this was a massive success.

Except for one: The cost.

03. THE COST BREAKDOWN

Here is exactly where “working” became “expensive.” Prime Video discovered four distinct “hidden taxes” that were draining their budget:

PROBLEM 1: The S3 “Middleman” Tax

In this design, services don’t talk to each other; they talk to storage.

The Flow: S3 → Lambda (download) → Process → Upload back to S3.

The Cost: It wasn’t just the data transfer; it was the thousands of S3 requests: a Tier-1 call (PUT) for every write and a GET for every read.

At Prime Video’s scale, pushing a 10GB video through both Lambdas (each one downloads 10GB and writes roughly 10GB back) meant about 40GB of movement per video.

The Result: Millions per year just for “passing the salt” between services.
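Here’s a rough way to see how request charges alone add up. It’s a sketch under loud assumptions: the pipeline stores individual frames as S3 objects, each frame is written once and read once per detector, and the prices are S3 Standard list prices I’m assuming for us-east-1 ($0.005 per 1,000 PUTs, $0.0004 per 1,000 GETs). Check the current S3 pricing page before trusting any number it prints.

```python
from decimal import Decimal

# Assumed S3 Standard request prices in USD per 1,000 requests (us-east-1).
# Verify against the current S3 pricing page for your region.
PUT_PRICE_PER_1K = Decimal("0.005")
GET_PRICE_PER_1K = Decimal("0.0004")
SECONDS_PER_MONTH = 30 * 24 * 3600


def monthly_s3_request_cost(frames_per_second: int, detectors: int,
                            streams: int, seconds: int = SECONDS_PER_MONTH) -> Decimal:
    """Request charges only (no storage, no transfer) under a hypothetical model:
    every frame is PUT once by the splitter and GET once by each detector."""
    frames = frames_per_second * seconds * streams
    puts = frames
    gets = frames * detectors
    return (puts * PUT_PRICE_PER_1K + gets * GET_PRICE_PER_1K) / 1000


if __name__ == "__main__":
    for streams in (1, 1_000):
        cost = monthly_s3_request_cost(frames_per_second=1, detectors=3, streams=streams)
        print(f"{streams:>5} streams: ${cost:,.2f}/month in S3 requests")
```

For one always-on stream sampled at one frame per second with three detectors, that’s about $16 a month in requests alone; the total scales linearly with streams, frame rate, and detectors.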

PROBLEM 2: Paying the “Traffic Cop” (Orchestration)

Step Functions is great for visibility, but Standard Workflows charge for every “State Transition.”

The Bill: $0.025 per 1,000 transitions.

The Scale: For a video stream with multiple checks per second, they were hitting account limits and burning cash just to tell the next Lambda to “start.”
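The orchestration bill is plain multiplication. In this sketch the $0.025 per 1,000 transitions is the price from “The Bill” above (Standard Workflows, free tier ignored); the transitions-per-second figure is whatever your state machine actually does, and the 5 used in the example is hypothetical.

```python
from decimal import Decimal

# Step Functions Standard Workflows: $0.025 per 1,000 state transitions
# (free tier ignored).
PRICE_PER_1K_TRANSITIONS = Decimal("0.025")
SECONDS_PER_MONTH = 30 * 24 * 3600


def monthly_orchestration_cost(transitions_per_second: int, streams: int,
                               seconds: int = SECONDS_PER_MONTH) -> Decimal:
    """Cost of driving `streams` always-on streams through a state machine that
    performs `transitions_per_second` transitions per second of each stream."""
    transitions = transitions_per_second * seconds * streams
    return transitions * PRICE_PER_1K_TRANSITIONS / 1000


if __name__ == "__main__":
    # Hypothetical: 5 transitions per second of stream.
    print(monthly_orchestration_cost(5, streams=1))      # one stream
    print(monthly_orchestration_cost(5, streams=1_000))  # 1,000 streams
```

Five transitions per second on one stream running all month is 12,960,000 transitions, or $324; the account-level throttling mentioned above isn’t modeled here.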

PROBLEM 3: The 15-Minute Wall

AWS Lambda has a hard 15-minute timeout.

The Workaround: For long videos, they had to split files into chunks.

The Hidden Cost: Splitting meant more S3 calls, more orchestration, and more complexity. The solution to the limitation was more expensive than the task itself.
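Here’s a sketch of the chunking arithmetic. The 900-second ceiling is Lambda’s real maximum timeout; the compute-per-video-second ratio, the 180 seconds of headroom, and the “one GET and one PUT per chunk per stage” overhead model are assumptions for illustration.

```python
import math

LAMBDA_MAX_TIMEOUT_S = 15 * 60  # Lambda's hard ceiling: 900 seconds


def chunks_needed(video_seconds: float, compute_per_video_second: float,
                  headroom_s: int = 180) -> int:
    """Smallest number of equal chunks that each finish inside the timeout.

    compute_per_video_second (seconds of work per second of video) and the
    headroom reserved for cold starts and S3 transfers are assumptions.
    """
    budget_s = LAMBDA_MAX_TIMEOUT_S - headroom_s
    total_work_s = video_seconds * compute_per_video_second
    return max(1, math.ceil(total_work_s / budget_s))


def s3_requests(chunks: int, stages: int = 2) -> int:
    # Hypothetical overhead model: each chunk costs one GET and one PUT per stage.
    return chunks * stages * 2


if __name__ == "__main__":
    for label, seconds in (("30-second clip", 30), ("2-hour movie", 2 * 3600)):
        n = chunks_needed(seconds, compute_per_video_second=1.0)
        print(f"{label}: {n} chunk(s), {s3_requests(n)} S3 requests")
    # 30-second clip: 1 chunk(s), 4 S3 requests
    # 2-hour movie: 10 chunk(s), 40 S3 requests
```

Every extra chunk multiplies the S3 calls and the orchestration hand-offs, which is exactly the overhead the workaround was supposed to avoid.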

PROBLEM 4: The “Zombie” Polling Tax

When services are decoupled, they spend a lot of time asking: “Are you done yet?” Whether it’s a Lambda sleeping in a loop while it checks a job’s status (billed for every second it waits) or a Step Functions Wait → Check → Choice loop (billed for every state transition), you are paying for work that does absolutely nothing.
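Polling cost is easy to estimate for the Step Functions case. This sketch assumes a hypothetical Wait → CheckStatus → Choice loop (three state transitions per poll) billed at the $0.025 per 1,000 transitions quoted earlier; the job length, poll interval, and job count are made-up inputs.

```python
import math
from decimal import Decimal

PRICE_PER_1K_TRANSITIONS = Decimal("0.025")  # Step Functions Standard Workflows
TRANSITIONS_PER_POLL = 3  # assumed loop: Wait -> CheckStatus (Task) -> Choice


def monthly_polling_cost(job_seconds: int, poll_interval_s: int,
                         jobs_per_month: int) -> Decimal:
    """Transitions spent asking "Are you done yet?" until each job finishes."""
    polls_per_job = math.ceil(job_seconds / poll_interval_s)
    transitions = polls_per_job * TRANSITIONS_PER_POLL * jobs_per_month
    return transitions * PRICE_PER_1K_TRANSITIONS / 1000


if __name__ == "__main__":
    # Hypothetical workload: 10-minute jobs polled every 10 s, 100,000 jobs a month.
    print(monthly_polling_cost(600, 10, 100_000))
```

A 10-minute job polled every 10 seconds burns 180 transitions; across 100,000 jobs a month that’s $450 spent on “Are you done yet?”, versus a handful of transitions for a callback that fires once when the job finishes.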

THE KUBERNETES EQUIVALENT

You might not be using Step Functions, but if you’re running microservices on Kubernetes, you’re likely paying the same “Distributed Tax”:

The Translator (Serialization): Imagine two people who speak different languages. Every time they talk, they need a translator to rewrite every sentence. In microservices, your CPU is that translator, constantly burning cycles to turn data into a format (like JSON) that can travel over the network, and then back again on the other side.

The Guard (Sidecar): This is like having a security guard follow every single employee (your app pods) everywhere they go. Even if the employee is just sitting at their desk, you are still paying for the guard’s chair, desk, and salary (Memory and CPU).

The Commute (Network): Sending data between services is like driving across town to talk to a coworker. It takes time and fuel. In a monolith, that same data is already in the “same room” (the process’s own memory), so the conversation is orders of magnitude faster and incurs no network or data-transfer charges.

At Prime Video’s scale, this was unsustainable. At your scale, it may well be your biggest source of waste.
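To feel the Translator and the Commute at once, here’s a small Python sketch that hands the same list of records to a “downstream service” two ways: serialized to JSON and sent over a loopback TCP connection, or passed as a plain in-process reference. The echo server, the 8-byte length prefix, and the payload shape are all hypothetical. Loopback also flatters the network path (no NIC, no TLS, no sidecar, no cross-AZ hop), and `via_network` times connection setup too, so treat the numbers as a rough illustration rather than a benchmark.

```python
import json
import socket
import threading
import time


def make_payload(n: int) -> list[dict]:
    # Hypothetical per-frame results passed from one "service" to the next.
    return [{"frame": i, "score": i % 100 / 100, "defect": i % 97 == 0} for i in range(n)]


def recv_exact(sock: socket.socket, n: int) -> bytes:
    # TCP is a byte stream: keep reading until exactly n bytes have arrived.
    buf = bytearray()
    while len(buf) < n:
        chunk = sock.recv(n - len(buf))
        if not chunk:
            raise ConnectionError("peer closed the connection")
        buf += chunk
    return bytes(buf)


def echo_once(server: socket.socket) -> None:
    # Stand-in downstream service: read one length-prefixed message, send it back.
    conn, _ = server.accept()
    with conn:
        size = int.from_bytes(recv_exact(conn, 8), "big")
        body = recv_exact(conn, size)
        conn.sendall(size.to_bytes(8, "big") + body)


def via_network(payload: list[dict]) -> list[dict]:
    """Serialize to JSON, cross a loopback TCP hop, deserialize the reply."""
    with socket.create_server(("127.0.0.1", 0)) as server:  # port 0: OS picks
        worker = threading.Thread(target=echo_once, args=(server,))
        worker.start()
        with socket.create_connection(server.getsockname()) as client:
            body = json.dumps(payload).encode("utf-8")           # the translator
            client.sendall(len(body).to_bytes(8, "big") + body)  # the commute
            size = int.from_bytes(recv_exact(client, 8), "big")
            reply = json.loads(recv_exact(client, size))         # translate back
        worker.join()
    return reply


def in_process(payload: list[dict]) -> list[dict]:
    # The same hand-off inside one process: the callee just gets a reference.
    return payload


if __name__ == "__main__":
    data = make_payload(100_000)
    for fn in (via_network, in_process):
        start = time.perf_counter()
        fn(data)
        print(f"{fn.__name__}: {(time.perf_counter() - start) * 1000:.2f} ms")
```

The network version hands back an equal but brand-new copy of the data; the in-process version hands back the very same object, with nothing encoded, copied, or sent.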

04. THE “BEST PRACTICE” TRAP

How did a team of world-class engineers at Amazon end up with a million-dollar bill for a “simple” monitoring service?

They followed the rules.

It’s easy to imagine how it happened: they saw Netflix’s success with microservices. They read the AWS whitepapers on “Serverless First.” They attended the keynote talks on event-driven design. They did exactly what “Best Practices” dictated.

The Problem: Netflix’s scale ≠ Prime Video’s workload.

Netflix: Billions of global requests across thousands of distinct services. They need microservices to prevent their organization from collapsing.

Prime Video Monitoring: A high-intensity, focused workload processing a specific set of videos.

In tech, we are constantly sold the idea that:

“Microservices are modern.”

“Serverless is the future.”

“Distributed systems are the only way to scale.”

The result? Teams build distributed systems for workloads that would run better (and 90% cheaper) on a single large instance.

THE KUBERNETES VERSION

We see this every day in the K8s ecosystem. You have 5–10 services and a team of 5 engineers, but your cluster looks like a Google data center:

The Service Mesh: Installing Istio for “security” (mTLS) when your internal traffic is already safe. You’re paying for a massive engine just to move the car an inch.

The Over-Observer: Adding complex Distributed Tracing (Jaeger) because “observability is key,” even though you could just check a single log file to find the error.

The Pipeline Overload: A 20-stage CI/CD pipeline for a service that only deploys once a week. You’ve built a factory for a product you barely ship.

The Anti-Pattern: Adopting solutions for problems you don’t actually have yet.

→ You aren’t Netflix.

→ You don’t have 500 engineers.

When you adopt “Netflix-scale” tooling for a “Startup-scale” problem, you don’t get scalability → you just get a $2,000–$5,000/month “Complexity Tax” on your AWS bill.
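The mesh’s “security guard” has a line item you can compute. This sketch assumes each injected sidecar requests 100m CPU and 128Mi of memory, figures I’m assuming from Istio’s default `istio-proxy` resource requests; check the values in your own mesh install, since many teams tune them. Requests are reserved by the scheduler whether or not the proxy is busy.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class SidecarRequests:
    cpu_millicores: int = 100  # assumed default istio-proxy CPU request
    memory_mib: int = 128      # assumed default istio-proxy memory request


def sidecar_reservation(pods: int,
                        sidecar: SidecarRequests = SidecarRequests()) -> tuple[float, float]:
    """(vCPU, GiB) the scheduler reserves for sidecars across `pods` pods."""
    vcpu = pods * sidecar.cpu_millicores / 1000
    gib = pods * sidecar.memory_mib / 1024
    return vcpu, gib


if __name__ == "__main__":
    for pods in (20, 60, 200):
        vcpu, gib = sidecar_reservation(pods)
        print(f"{pods:>3} pods -> {vcpu:.1f} vCPU, {gib:.2f} GiB reserved by sidecars")
```

Sixty meshed pods reserve 6 vCPU and 7.5 GiB before your app does any work; multiply by your node pricing to get the monthly figure.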

05. BACK TO BASICS

Prime Video didn’t try to “fix” the microservices. They deleted them.

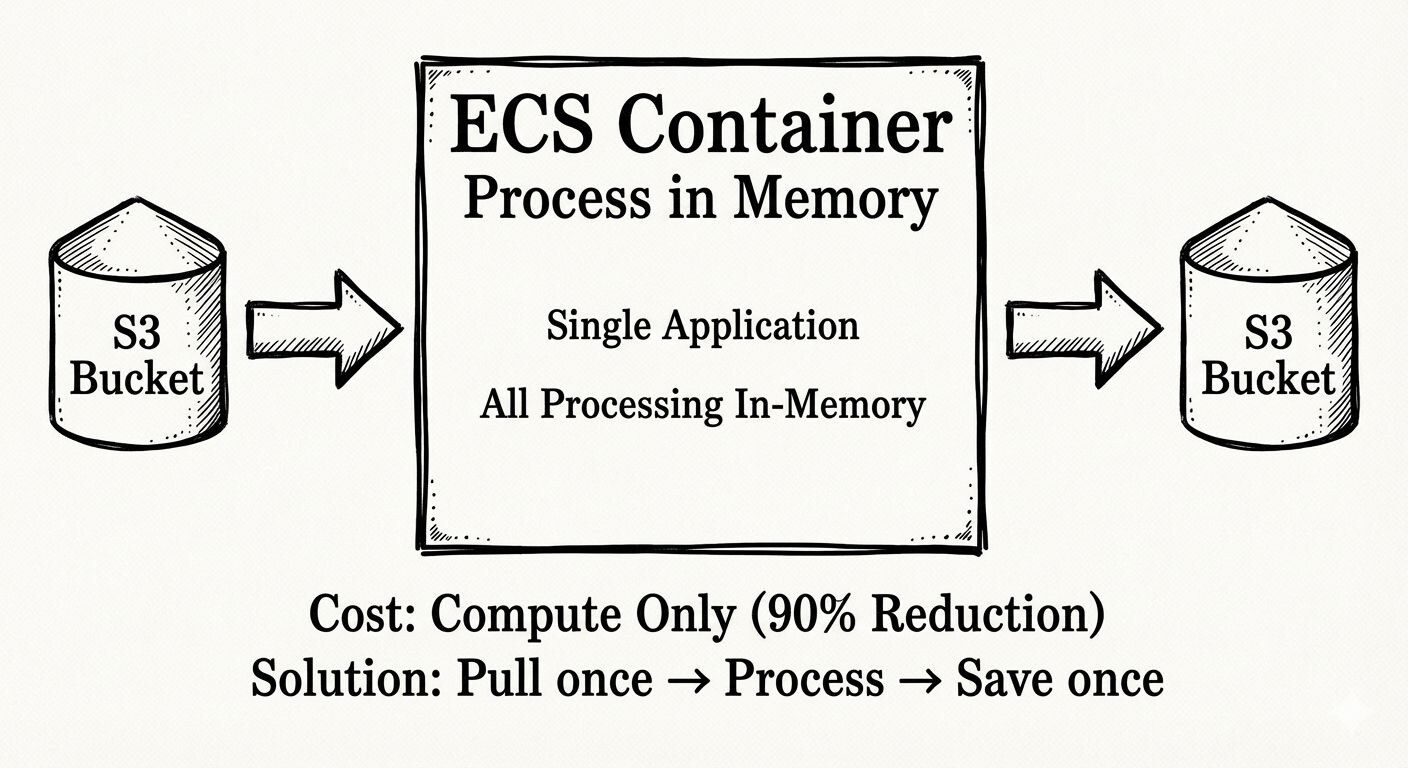

They rebuilt the entire workflow as a single application running on Amazon ECS (a “monolith application,” in the team’s own words). The new architecture followed the simplest path possible:

Pull the video from S3 once.

Process everything in-memory.

Write the final results once.

Done.

By moving everything into a single process, they eliminated the “S3 Ping-Pong,” the Step Functions orchestration fees, and the 15-minute Lambda wall.
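Here’s a minimal sketch of the “pull once, process in memory, write once” shape. The detectors, the `load`/`save` callables, and the frame format are hypothetical placeholders, not Prime Video’s implementation; the point is that every detector reads the same in-memory frames with no storage hop in between.

```python
from concurrent.futures import ThreadPoolExecutor
from typing import Callable


# Hypothetical detectors: real ones would inspect decoded video frames.
def black_frames(frames: list[bytes]) -> list[int]:
    return [i for i, f in enumerate(frames) if f.count(0) == len(f)]


def frozen_frames(frames: list[bytes]) -> list[int]:
    return [i for i in range(1, len(frames)) if frames[i] == frames[i - 1]]


DETECTORS: dict[str, Callable[[list[bytes]], list[int]]] = {
    "black": black_frames,
    "frozen": frozen_frames,
}


def analyze(frames: list[bytes]) -> dict[str, list[int]]:
    # Every detector reads the same in-memory frames: no storage hop between them.
    with ThreadPoolExecutor(max_workers=len(DETECTORS)) as pool:
        futures = {name: pool.submit(fn, frames) for name, fn in DETECTORS.items()}
        return {name: future.result() for name, future in futures.items()}


def process_video(load: Callable[[str], list[bytes]],
                  save: Callable[[str, dict], None], key: str) -> None:
    frames = load(key)           # pull once
    save(key, analyze(frames))   # process in memory, write once


if __name__ == "__main__":
    store = {"clip": [b"\x00\x00", b"ab", b"ab", b"\x00\x00"]}
    results = {}
    process_video(store.__getitem__, results.__setitem__, "clip")
    print(results)  # {'clip': {'black': [0, 3], 'frozen': [2]}}
```

One caveat: in CPython, threads won’t speed up CPU-bound detectors because of the GIL; for real work you’d reach for processes or native code. The data path (one read, one write) is the part that matters here.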

The Result: A 90% Cost Reduction

It wasn’t just a cost win. By going “backwards” to a monolith-style architecture, they gained:

Performance: In-memory data transfer is orders of magnitude faster than network calls.

Operational Sanity: One service to monitor, one codebase to debug, and one deployment pipeline to manage.

Scale: They could now handle 2-hour movies as easily as 30-second clips.

THE LESSON FOR KUBERNETES

You don’t necessarily need to move to a monolith. You need to match your architecture to your data flow. In the Kubernetes world, we often split services because of “logic,” but we forget about “Data Gravity.” If two services are constantly passing massive amounts of data back and forth, they probably shouldn’t be two services.

The Goal: Minimize the “Network Tax”. If your pods are spending more time talking to each other than doing actual work, it’s time to merge them.
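One way to put a number on the “Network Tax” is to compare how long each request takes with how long it spends waiting on other services, using whatever timing data you already have (trace spans or plain timing logs). The `Span` shape and the 50% threshold below are assumptions for illustration.

```python
from dataclasses import dataclass


@dataclass
class Span:
    service: str
    duration_ms: float    # total time to serve the request
    downstream_ms: float  # time spent waiting on calls to other services


def network_share(spans: list[Span]) -> dict[str, float]:
    """Fraction of each service's request time spent waiting on other services."""
    totals: dict[str, list[float]] = {}
    for s in spans:
        t = totals.setdefault(s.service, [0.0, 0.0])
        t[0] += s.duration_ms
        t[1] += s.downstream_ms
    return {svc: wait / total for svc, (total, wait) in totals.items() if total > 0}


def merge_candidates(spans: list[Span], threshold: float = 0.5) -> list[str]:
    # "More time talking than working": over half the time spent waiting on peers.
    return sorted(svc for svc, share in network_share(spans).items() if share > threshold)


if __name__ == "__main__":
    spans = [Span("orders", 100.0, 70.0), Span("orders", 100.0, 50.0),
             Span("billing", 40.0, 4.0)]
    print(network_share(spans))     # {'orders': 0.6, 'billing': 0.1}
    print(merge_candidates(spans))  # ['orders']
```

A service that spends most of its time waiting on one specific neighbor is the strongest hint; the next step is checking which peer those downstream calls go to.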

06. THE SCALE FRAMEWORK

The biggest mistake in modern engineering is architecting for the problems you hope to have, rather than the ones you actually have.

To avoid the Prime Video trap, you need to match your complexity to your headcount and your traffic. Here is a baseline for staying “BeyondOps” (efficient and lean):

The Startup Stage (3-10 Services): Stick to a Monolith or a few “Macro-services.” One cluster, one database. Focus on speed, not isolation.

The Growth Stage (10-50 Services): Moderate microservices. Start being “resource-aware” → this is where you begin monitoring inter-service costs.

The Enterprise Stage (50+ Services): True distributed systems. This is the only stage where a Service Mesh or complex orchestration pays for itself.

The Rule: Don’t build for Netflix scale when you’re at “Series A” scale.

Specific Consolidation Targets

If you suspect you’ve over-engineered, look for these “Red Flags.” These are your best candidates for merging:

The “Coupled” Pair: Services that always have to be deployed at the exact same time.

The “Data Twins”: Services that share 80%+ of the same data or database tables.

The “Chatty” Neighbors: Services that communicate via synchronous calls (REST/gRPC) constantly just to complete a single task.

Single Ownership: If one team owns five microservices, they are likely just managing five times the overhead for no reason.

Consolidation = Fewer Pods = Less Networking = Lower AWS Bill.
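The “Coupled” Pair is the easiest red flag to check from data you already have. This sketch assumes a hypothetical deploy log of `(release_id, service)` rows and scores each service pair by how often they ship together (Jaccard similarity over release IDs); the 0.9 threshold is an arbitrary starting point.

```python
from collections import defaultdict
from itertools import combinations


def co_deploy_ratios(deploys: list[tuple[str, str]]) -> dict[tuple[str, str], float]:
    """Jaccard similarity of release IDs for every pair of services.

    deploys: (release_id, service) rows from a hypothetical deploy log.
    1.0 means the two services have only ever shipped together.
    """
    releases: dict[str, set[str]] = defaultdict(set)
    for release_id, service in deploys:
        releases[service].add(release_id)
    ratios = {}
    for a, b in combinations(sorted(releases), 2):
        shared = releases[a] & releases[b]
        ratios[(a, b)] = len(shared) / len(releases[a] | releases[b])
    return ratios


def coupled_pairs(deploys: list[tuple[str, str]],
                  threshold: float = 0.9) -> list[tuple[str, str]]:
    return [pair for pair, ratio in co_deploy_ratios(deploys).items() if ratio >= threshold]


if __name__ == "__main__":
    log = [("r1", "api"), ("r1", "worker"), ("r2", "api"), ("r2", "worker"),
           ("r3", "billing")]
    print(coupled_pairs(log))  # [('api', 'worker')]
```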

07. THE 30-MINUTE COST AUDIT

Audit your services for consolidation opportunities.

STEP 1: List your services (5 minutes)

Grab your top 10 most expensive services from your AWS bill or Kubernetes dashboard.
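If your cost data lands in a CSV, Step 1 is a few lines of Python. The `service` and `cost_usd` column names are hypothetical; map them to whatever your AWS cost export or Kubernetes cost tool actually produces.

```python
import csv
import io
from collections import Counter


def top_services(cost_csv: str, n: int = 10) -> list[tuple[str, float]]:
    """Total cost per service, most expensive first.

    Expects hypothetical `service` and `cost_usd` columns; rename them to match
    your AWS cost export or Kubernetes cost tool.
    """
    totals = Counter()
    for row in csv.DictReader(io.StringIO(cost_csv)):
        totals[row["service"]] += float(row["cost_usd"])
    return totals.most_common(n)


if __name__ == "__main__":
    sample = "service,cost_usd\napi,120.5\nworker,300\napi,79.5\ncron,5\n"
    print(top_services(sample, n=2))  # [('worker', 300.0), ('api', 200.0)]
```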

STEP 2: For each service, ask (10 minutes):

Does it deploy independently?

Does it scale independently?

Does it need to exist separately?

STEP 3: Identify your candidates (5 minutes)

Look for:

✓ Services that are always deployed together (synchronized deploys)

✓ Services sharing 80%+ of their data

✓ Services with <100 requests/day between them
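For “sharing 80%+ of data,” a simple proxy is the overlap between the database tables each service touches. This sketch uses Jaccard similarity (shared tables ÷ all tables either service touches); that choice, and the `tables_by_service` input, are assumptions, since the checklist doesn’t say how to measure the overlap.

```python
from itertools import combinations


def data_twins(tables_by_service: dict[str, set[str]],
               threshold: float = 0.8) -> list[tuple[str, str]]:
    """Service pairs whose table sets overlap by at least `threshold`.

    Overlap is Jaccard similarity: shared tables / all tables either touches.
    """
    twins = []
    for a, b in combinations(sorted(tables_by_service), 2):
        ta, tb = tables_by_service[a], tables_by_service[b]
        union = ta | tb
        if union and len(ta & tb) / len(union) >= threshold:
            twins.append((a, b))
    return twins


if __name__ == "__main__":
    usage = {
        "orders": {"orders", "order_items", "customers", "payments"},
        "invoices": {"orders", "order_items", "customers", "payments", "invoices"},
        "search": {"products"},
    }
    print(data_twins(usage))  # [('invoices', 'orders')]
```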

STEP 4: Calculate potential savings (10 minutes)

Per service consolidated:

Fewer pods: $20-50/month

Less networking: $10-30/month

Simpler ops: Time saved

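Total ROI: 30 minutes of audit. The two direct line items add up to $30–80/month per consolidated service; once you count the ops time you get back, potential savings can reach $200–500/month per consolidated service.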
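Step 4 as a tiny calculator: it sums the per-service ranges from the list above and keeps the “Simpler ops” line separate, because you have to put your own price on time saved. `hours_saved` (per consolidated service per month) and `hourly_rate` are your assumptions, not figures from this post.

```python
def monthly_savings(services: int, hours_saved: float = 0.0, hourly_rate: float = 0.0,
                    pods_usd: tuple[float, float] = (20, 50),
                    network_usd: tuple[float, float] = (10, 30)) -> tuple[float, float]:
    """(low, high) monthly savings for `services` consolidated services.

    pods_usd / network_usd are the per-service ranges from Step 4.
    hours_saved (per consolidated service per month) and hourly_rate are your
    own assumptions for pricing the "Simpler ops" line.
    """
    ops_usd = hours_saved * hourly_rate
    low = services * (pods_usd[0] + network_usd[0] + ops_usd)
    high = services * (pods_usd[1] + network_usd[1] + ops_usd)
    return low, high


if __name__ == "__main__":
    print(monthly_savings(3))                                 # infrastructure only
    print(monthly_savings(3, hours_saved=2, hourly_rate=75))  # with a value on ops time
```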

Try it this week.

08. KEY TAKEAWAY

Architecture is a cost decision, not just a technical one.

Prime Video’s “modern” architecture was expensive because it didn’t match their workload. Your Kubernetes setup might be doing the same thing.

The question isn’t “What’s the best architecture?” It’s “What’s the right architecture for MY scale, MY team, and MY budget?”

Netflix’s architecture works for Netflix. Your architecture should work for you.

Sometimes that means fewer services, not more. Sometimes that means simpler, not “cloud-native.”

Match the solution to the problem you actually have, not the one you saw in a conference talk.

WHY I’M DOING THIS

I’ve spent 13 years living in production infra, dealing with Kubernetes and cloud infrastructure.

Over that time, I’ve seen a frustrating pattern: engineers are being pushed toward “best practices” that actually make their lives harder and the bills higher.

I’m starting this newsletter to cut through that noise. My goal is to share what actually works in the real world and not just what looks good on a conference slide. I want to help you build infrastructure that is lean, cost-effective, and doesn’t keep you up at 2 AM.

Let’s talk: If you found this useful, hit reply and let me know: What is currently the biggest “complexity tax” in your stack? I read and reply to every message.

Spread the word: If you think your team or colleagues are over-paying for complexity, please share this post. I need your support to get these insights to more engineers and leaders.

See you next week,

-Naveen

Thanks for reading BeyondOps Newsletter! This post is public so feel free to share it.