Back propagation - The backbone of Neural Networks!

Neural Networks mimic human brains, using interconnected layers of Neurons to process information.

Just as the human brain is made up of interconnected cells that pass information from one to the next, artificial neural networks are made up of interconnected layers of Neurons. Let’s explore.

Neural Networks are built from three kinds of layers of interconnected Neurons. There is one input layer, one output layer, and one or more hidden layers in between.

Input Layer - Gateway for data

Hidden Layers - Processing power

Output layer - Final output.

These Neurons are connected by "weights," which determine how strongly one neuron influences another - just like how some memories or experiences have a stronger impact on our decisions than others.

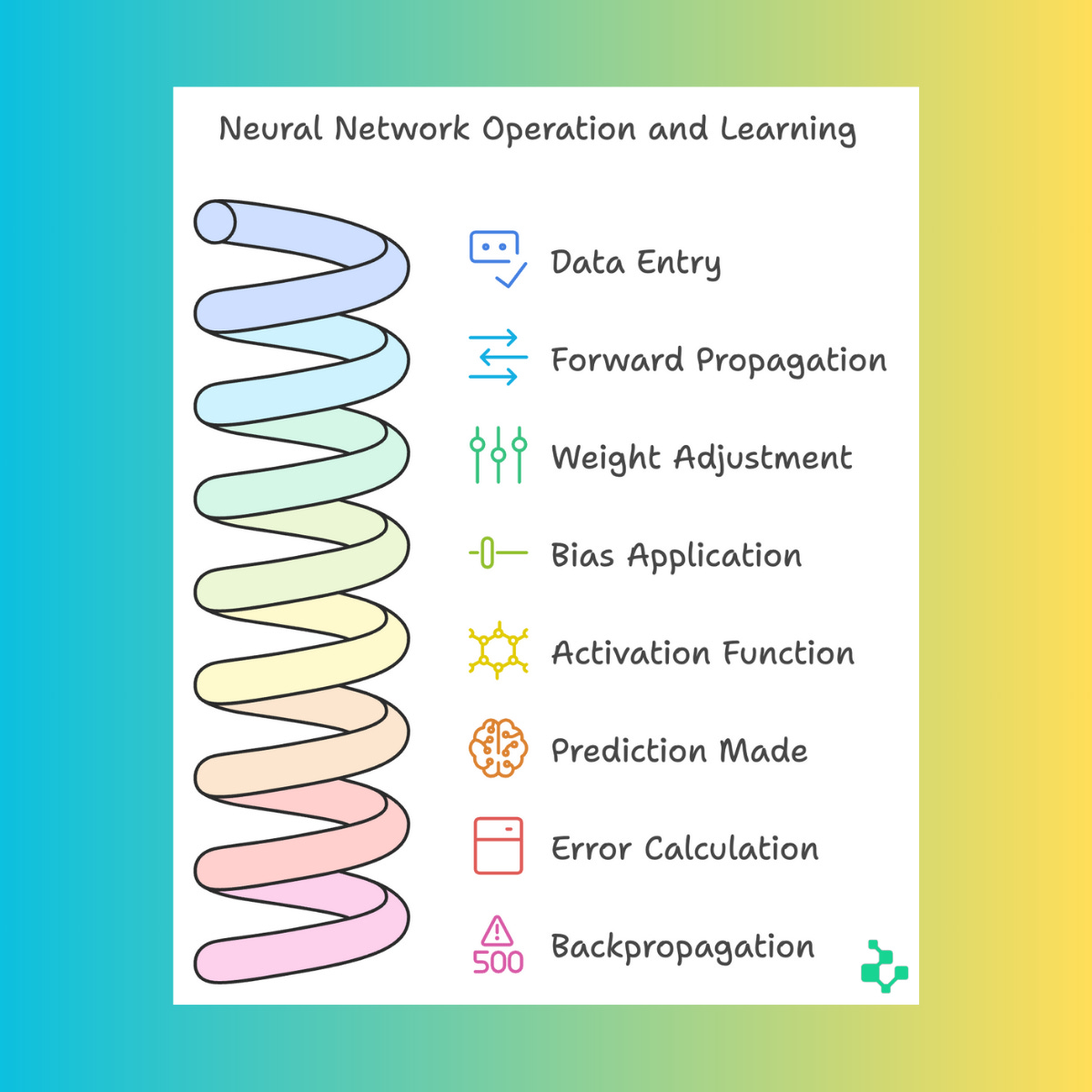

When data enters the Neural network, it travels from the input layer through the hidden layers to the output layer. This journey is called forward propagation. During this journey, each neuron transforms the data based on the weights, biases and activation functions.
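To make forward propagation concrete, here is a minimal sketch in plain Python. It assumes a tiny network with 2 inputs, 2 hidden Neurons and 1 output Neuron, and it uses the sigmoid activation function. The weights and biases are made-up numbers chosen only for illustration, and the names `layer_forward` and `forward` are hypothetical helpers, not part of any library.

```python
import math

def sigmoid(z):
    """Squash any real number into the range (0, 1)."""
    return 1.0 / (1.0 + math.exp(-z))

def layer_forward(inputs, weights, biases):
    """Compute one layer: each neuron takes a weighted sum of the inputs,
    adds its bias, and applies the activation function."""
    outputs = []
    for neuron_weights, bias in zip(weights, biases):
        z = sum(w * x for w, x in zip(neuron_weights, inputs)) + bias
        outputs.append(sigmoid(z))
    return outputs

# Hypothetical parameters, picked by hand for illustration only.
hidden_weights = [[0.5, -0.5], [1.0, 1.0]]  # 2 hidden neurons, 2 inputs each
hidden_biases = [0.0, -1.0]
output_weights = [[1.0, -1.0]]              # 1 output neuron, 2 hidden inputs
output_biases = [0.0]

def forward(inputs):
    """Pass the data from the input layer through the hidden layer to the output."""
    hidden = layer_forward(inputs, hidden_weights, hidden_biases)
    return layer_forward(hidden, output_weights, output_biases)

prediction = forward([1.0, 1.0])
print(prediction)
```

The output of each layer becomes the input of the next, which is all that "travelling through the network" means here.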

Weights:

Imagine you're deciding where to go for dinner. Different factors influence your decision, right? The taste of the food might be very important (high weight), while the colour of the restaurant's walls might not matter much (low weight).

In a neural network, weights work the same way - they determine how much each piece of information matters in making a decision.

Biases:

Think of bias as your personal preferences. You don't want to go to a restaurant unless it has at least a 4-star rating - that's your threshold.

Similarly, in a neural network, the bias is a value added to a neuron's weighted inputs. It shifts the threshold that those inputs must clear before the neuron becomes active and passes on information.
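The dinner decision can be sketched as a single Neuron. The feature scores, weights and bias below are invented for illustration, and `weighted_sum` is a hypothetical helper name. The final decision uses a plain "greater than zero" test to keep things simple.

```python
# Hypothetical inputs for one restaurant, each scored from 0 to 1.
features = {"taste": 0.9, "wall_colour": 0.2}

# Hypothetical weights: taste matters a lot, wall colour barely at all.
weights = {"taste": 2.0, "wall_colour": 0.1}

# A negative bias means the weighted evidence must exceed 1.5
# before the total becomes positive.
bias = -1.5

def weighted_sum(features, weights, bias):
    """Multiply each input by its weight, add the results, then add the bias."""
    return sum(weights[name] * value for name, value in features.items()) + bias

z = weighted_sum(features, weights, bias)
go_to_dinner = z > 0  # simple threshold decision
print(z, go_to_dinner)
```

With these numbers the good taste score is enough to clear the threshold on its own. A restaurant with poor food and beautiful walls would not be.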

Activation Functions:

Picture a light switch with a dimmer. A regular on/off switch has only two states - it's either on or off. But a dimmer allows for many levels of brightness.

Activation functions are like dimmers for Neurons. They allow for a range of outputs, not just "yes" or "no". Most of them are also non-linear, which is what lets a network with several layers understand and represent complex, nuanced information - just like how we perceive the world in shades of grey, not just black and white.
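Here is a small sketch comparing an on/off switch with two common "dimmers". The step function, sigmoid and ReLU are standard activation functions, and the input values in the loop are arbitrary examples.

```python
import math

def step(z):
    """On/off switch: outputs 1 once z reaches 0, otherwise 0."""
    return 1.0 if z >= 0 else 0.0

def sigmoid(z):
    """Smooth dimmer: outputs a value strictly between 0 and 1."""
    return 1.0 / (1.0 + math.exp(-z))

def relu(z):
    """Rectified linear unit: passes positive values, blocks negative ones."""
    return max(0.0, z)

for z in [-2.0, -0.5, 0.0, 0.5, 2.0]:
    print(f"z={z:+.1f}  step={step(z):.0f}  sigmoid={sigmoid(z):.3f}  relu={relu(z):.1f}")
```

The step function jumps straight from 0 to 1, while sigmoid rises gradually, so a small change in the input produces a small change in the output. That smoothness is what makes learning by small adjustments possible.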

Now, here's the interesting part. Imagine you're teaching a child to recognize animals. You show them a picture of a cat, but they say "dog." That's when you correct them, right? Neural networks learn similarly through a process called back propagation.

The network makes a prediction (output).

We compare this prediction to the correct answer.

The difference between the two, measured by a loss function, is called the "loss" or "error."

This error is then propagated backwards through the network to work out how much each weight and bias contributed to it.

The weights and biases are adjusted to minimize this error.

It's like the network is saying, "Oops, I made a mistake. Let me adjust my understanding a bit."
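The five steps above can be sketched for the simplest possible case: a single Neuron with one weight and one bias. This is an illustration under assumptions, not a full implementation. It assumes a sigmoid activation, a squared-error loss, one made-up training example, and a hypothetical helper called `train_step`. In a real network the same chain-rule calculation is repeated layer by layer.

```python
import math

def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))

def train_step(x, target, w, b, learning_rate):
    """One pass through the five steps for a single sigmoid neuron."""
    # Step 1. Forward pass: the network makes a prediction.
    z = w * x + b
    prediction = sigmoid(z)

    # Steps 2 and 3. Compare with the correct answer using a squared-error loss.
    loss = 0.5 * (prediction - target) ** 2

    # Step 4. Backward pass: apply the chain rule from the loss back to w and b.
    dloss_dpred = prediction - target
    dpred_dz = prediction * (1.0 - prediction)  # derivative of sigmoid
    dloss_dz = dloss_dpred * dpred_dz
    grad_w = dloss_dz * x   # because dz/dw = x
    grad_b = dloss_dz       # because dz/db = 1

    # Step 5. Adjust the weight and bias a small step against the gradient.
    w = w - learning_rate * grad_w
    b = b - learning_rate * grad_b
    return w, b, loss

# Hypothetical starting values and a single made-up training example.
w, b = 0.0, 0.0
losses = []
for _ in range(50):
    w, b, loss = train_step(x=1.0, target=1.0, w=w, b=b, learning_rate=1.0)
    losses.append(loss)

print(losses[0], losses[-1])
```

Each call makes the Neuron slightly less wrong, so the last loss printed is smaller than the first.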

A Real-World Example: Teaching AI to Recognize π.

Let's say we want our neural network to recognize the symbol π. We start by showing various handwritten versions of π.

Initially, the network might confuse π with other symbols like "n" or "m".

Each time it makes a mistake, we use back propagation to adjust its internal parameters.

Over many iterations, the network learns to distinguish the unique features of π.

Eventually, it can accurately recognize π, even in messy handwriting!

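This process of gradual improvement is driven by "gradient descent": back propagation tells the network which direction reduces the error, and each update takes a small step in that direction. Imagine it as the network finding its way down a hill of errors to the valley of accuracy.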
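The "walking downhill" idea can be shown on a toy error curve. The curve below is invented for illustration, with its lowest point at 3, and the starting point, learning rate and step count are arbitrary choices. A real network does the same thing across thousands or millions of weights at once.

```python
def error(w):
    """A made-up error curve shaped like a valley with its lowest point at w = 3."""
    return (w - 3.0) ** 2

def error_gradient(w):
    """Slope of the error curve at w."""
    return 2.0 * (w - 3.0)

def gradient_descent(start, learning_rate, steps):
    """Repeatedly step in the direction that lowers the error."""
    w = start
    history = [w]
    for _ in range(steps):
        w = w - learning_rate * error_gradient(w)  # step downhill
        history.append(w)
    return history

history = gradient_descent(start=0.0, learning_rate=0.1, steps=50)
print(history[0], history[-1], error(history[-1]))
```

The learning rate controls the size of each step. If it is too small, the walk down the hill takes a long time. If it is too large, the steps can overshoot the valley.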

Types of Neural Networks and Their Applications:

1. Feed-Forward Neural Networks:

Used for:

Optical Character Recognition (OCR): Turning handwritten notes into typed text.

Spam Detection: Keeping your inbox clean and tidy.

2. Recurrent Neural Networks:

Used for:

Sentiment Analysis: Understanding if a product review is positive or negative.

Time-Series Prediction: Forecasting stock prices or weather patterns.

Conclusion: The Power of Artificial Neural Networks

Neural networks, with their ability to learn and adapt, are powering many of the AI technologies we use daily. From the voice assistants on our phones to the recommendation systems on our favourite streaming platforms, these artificial brains are already revolutionizing our world.

As we continue to refine and expand these technologies, artificial intelligence will gain many more incredible capabilities. The future is indeed exciting!